- chapter 5 Body and Mind

- section 5.1 Whole beings

- section 5.2 Sensing ourselves

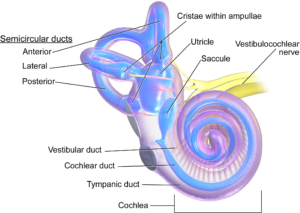

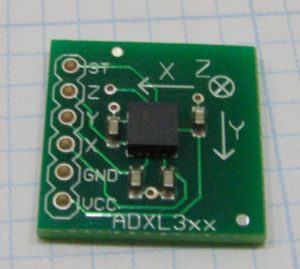

- figure 5.1 a) inner ear b) rollercoaster c) accelerometer

- section 5.3 The body shapes the mind — posture and emotion

- section 5.4 Cybernetics of the body

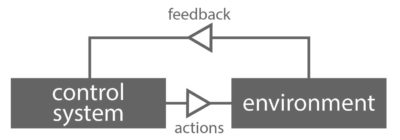

- figure 5.2 open loop control

- figure 5.3 closed loop control

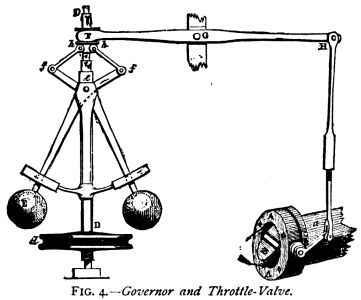

- figure 5.4 centrifugal governor

- figure 5.5 Dot to touch: sense your finger movements

- figure 5.6 Series of hand movements towards target (from [Dx03]). Note, exaggerated distances: the first ballistic movement is typically up to 90% of the final distance.

- box 5.1 Forever cyborgs

- section 5.5 The adapted body

- figure 5.7 Game controllers in action

- section 5.6 Plans and action

- figure 5.8 Automatic actions at breakfast

- figure 5.9 HTA for making a mug of tea

- figure 5.10 types of activity (from [Dx08])

- box 5.2 Iconic case study: Nintendo Wii

- section 5.7 The embodied mind

5.1 Whole beings

It has already been hard to write about the body without talking about the mind (or at least the brain) and perhaps even harder to talk about the mind without involving the body. We are not two separate beings, but one person in whom mind and body work together.

In the past the brain was seen as a sort of control centre where sensory information about the environment comes in and is interpreted, plans are made, then orders are sent out to the muscles and voice. However, this dualistic view loses a sense of integration and does not account for the full range of human experience. On the one hand, we sense not only the environment but our body as well, and in some ways we understand what we are thinking because of how we feel in our body. On the other hand, much of the loop between sensing and action happens subconsciously. In both cases the ‘little general in the head’ model falls apart.

While there is clearly some level of truth in the dualist ‘brain as control’ picture, more recent accounts stress instead the integrated nature of thought and action, focusing on our interaction with our environment.

5.2 Sensing ourselves

When we think of our senses, we normally think of the classic five: sight, hearing, touch, taste and smell. Psychologists call these exteroceptive senses as they tell us about the external world. However, we are also able to sense our own bodies, as when we have a stomach ache. These are called interoceptive senses. The nerves responsible for interoception lie within the body, but in fact some of these senses also tell us a lot about our physical position in the world.

We are all familiar with the way balance is aided by the semicircular canals in our ears. There are three channels, filled with fluid that is sensed by tiny hairs. This allows us to detect ‘up’ in three dimensions. Of course, if we spin or move too fast, perhaps on a fairground ride, the churning fluid makes us dizzy. A similar mechanism is used in mobile devices such as the iPhone: three accelerometers are placed at right angles, often embedded in a single chip (Fig. 5.1).
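The phone's approach can be sketched in a few lines. This is a minimal sketch, assuming the device is held still, so that the only acceleration the three sensors pick up is the pull of gravity, and assuming a convention in which a phone lying flat reads (0, 0, +1 g); real phone APIs differ in axis conventions and units, and the function names here are hypothetical. The code turns the three readings into an ‘up’ direction and measures how far the device is tilted from it.

```python
import math


def up_vector(ax: float, ay: float, az: float) -> tuple[float, float, float]:
    """Turn a still 3-axis accelerometer reading into a unit 'up' vector.

    Assumes a device at rest and a convention in which a phone lying
    flat reads (0, 0, +1 g). Function name is hypothetical.
    """
    magnitude = math.sqrt(ax * ax + ay * ay + az * az)
    if magnitude == 0:
        # In free fall all three sensors read zero: there is no 'up' to find.
        raise ValueError("no gravity signal (free fall?)")
    return (ax / magnitude, ay / magnitude, az / magnitude)


def tilt_degrees(ax: float, ay: float, az: float) -> float:
    """Angle between the device's own z-axis and 'up'."""
    _, _, uz = up_vector(ax, ay, az)
    uz = max(-1.0, min(1.0, uz))  # guard acos against rounding just outside [-1, 1]
    return math.degrees(math.acos(uz))


print(tilt_degrees(0.0, 0.0, 9.81))   # flat on a table: 0.0
print(tilt_degrees(0.0, 9.81, 9.81))  # propped up: approximately 45 degrees
print(tilt_degrees(9.81, 0.0, 0.0))   # standing on its edge: 90.0
```

A single reading only gives ‘up’ while the phone is still: shake it, or take it on a fairground ride, and the extra acceleration is mixed in with gravity, much as the churning fluid confuses the inner ear.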

However, this is not the only way we know what way up we are. Our eyes see the horizon, and our feet feel pressure from the floor. Sea-sickness is largely caused by these different ways of knowing which way is up, and how you are moving, conflicting with one another. This is also a problem in immersive virtual reality (VR) systems (see also Chapters 2 and 12). If you wear VR goggles or are in a VR CAVE (a small room with a virtual world projected around you), then the scene will change as you move your head, mimicking what you would see if you moved your head in the real world. However, if the virtual world simulation is not fast enough and there is even a small lag, the effect is rather like being at sea: your legs and ears tell you one thing, and your eyes something else.
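To see why even a small lag matters, here is a back-of-envelope sketch. It assumes a steady head turn and lumps the whole delay between moving your head and seeing the updated image into a single latency figure; the function name and the example numbers are illustrative assumptions, not measurements. The gap between where your inner ear says you are facing and what your eyes see is simply turning speed multiplied by delay.

```python
def view_mismatch_degrees(head_speed_deg_per_s: float, latency_ms: float) -> float:
    """Angle by which the rendered view trails the real head direction.

    Assumes a constant-speed head turn and a single end-to-end latency
    (tracking + rendering + display) for the VR system.
    """
    return head_speed_deg_per_s * latency_ms / 1000.0


# Illustrative values: a brisk head turn of 100 degrees per second.
for latency in (10, 20, 50, 100):
    print(f"{latency:>3} ms lag -> view trails by {view_mismatch_degrees(100, latency):.1f} degrees")
```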

It is not quite correct to say we detect which way is up. Most of our senses work by detecting change. Our ear channels are best at detecting changes in direction, so when someone is diving, or buried in snow after an avalanche, it can be difficult to know which way is up. Because there is equal support all the way round and they can see no horizon, their brains are not able to sort out a precise direction. Divers are taught to watch the bubbles as they will go upwards, and avalanche survival training suggests spitting and trying to feel the way the spit dribbles down your face.

The physics of the world also comes into play. Newtonian physics is based on the fact that you cannot tell how fast you are going without looking at something stationary (see also Chapter 11). You may be travelling at a hundred miles an hour in a train, but while you look at things in the carriage you can easily feel that you are still. We even cope with acceleration. As we go round a sharp corner we simply stand at a slight angle. It is when the train changes from straight track into a curve or back again, that we notice the movement. Likewise, in a braking car so long as we brake evenly we simply brace our body slightly. It is the change in acceleration that we feel, what road and rail engineers call ‘jerk’ (see Box 11.3).
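‘Jerk’ is simply the rate of change of acceleration, just as acceleration is the rate of change of speed. The minimal sketch below, which assumes speeds sampled at even time steps and uses hypothetical helper names, estimates both by taking differences between neighbouring samples. Even braking shows a steady acceleration but zero jerk, whereas a sudden stop produces a spike of jerk: the moment you actually feel.

```python
def rate_of_change(samples: list[float], dt: float) -> list[float]:
    """Finite-difference estimate of how fast a sampled quantity changes."""
    return [(later - earlier) / dt for earlier, later in zip(samples, samples[1:])]


def acceleration_and_jerk(speeds: list[float], dt: float) -> tuple[list[float], list[float]]:
    """From speeds (m/s) sampled every dt seconds, estimate acceleration (m/s^2) and jerk (m/s^3)."""
    acceleration = rate_of_change(speeds, dt)
    jerk = rate_of_change(acceleration, dt)
    return acceleration, jerk


even_braking = [20.0, 18.0, 16.0, 14.0, 12.0]  # loses 2 m/s every second
sudden_stop = [20.0, 20.0, 20.0, 0.0, 0.0]     # cruising, then stops dead

print(acceleration_and_jerk(even_braking, dt=1.0))  # ([-2.0, -2.0, -2.0, -2.0], [0.0, 0.0, 0.0])
print(acceleration_and_jerk(sudden_stop, dt=1.0))   # ([0.0, 0.0, -20.0, 0.0], [0.0, -20.0, 20.0])
```

Real sensor data is noisy, and taking differences amplifies noise, so practical systems usually smooth the readings before estimating jerk.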

Again, in virtual reality the same thing happens. Suppose you are controlling your motion through the environment using a joystick or other controller and then stop suddenly. The effect can be nauseating. All is well so long as you are moving forward at a constant speed, or even accelerating, decelerating or cornering smoothly. Your ear channels and body sense cannot tell the difference between standing still and being on a uniformly moving or accelerating platform. However, if you ‘stop’ in the VR environment, the visual image stops dead, but your body and ears make you feel you are still moving.

As well as having a sense of balance, we also know where parts of our body are. Close your eyes, then touch your nose with your finger — no problem, you ‘know’ where your arm is relative to your body and so can move it towards your nose. This sense of the location of your body, called proprioception, is based on various sensing nerves in your muscles, tendons and joints and is crucial to any physical activity. People who lose this ability due to disease find it very difficult to walk around or to grasp or hold things, even if they have full movement. They have to substitute looking at their limbs for knowing where they are and have to concentrate hard just to stay upright.

Proprioception is particularly important when using eyes-free devices, for example in a car where you can reach for a control without looking at it. In practice we do not use proprioception alone during such interactions, but also peripheral vision and touch. Indeed, James Gibson [Gi79] has argued that our vision and hearing should be regarded as serving a proprioceptive purpose as well as an exteroceptive one, because we are constantly positioning ourselves with respect to the world by virtue of what we see and hear.

5.3 The body shapes the mind — posture and emotion

It is reasonable that we need to sense our bodies to know if we have an upset stomach (and hence avoid eating until it is better), or to be able to reach for things without looking. However, it seems that our interoceptive senses do more than that: they do not just provide a form of ‘input’ to our thoughts and decisions, but shape our thoughts and emotions at a deep level.

You have probably heard of smile therapy: deliberately smiling in order to make yourself feel happier. Partly, this builds on the fact that when you smile other people tend to smile back, and if other people smile at you that makes you feel happy. But that is not the whole story. Experiments have shown that there is an effect even when there is no-one else to smile back at you and even when you don’t know you are smiling. In a typical experiment researchers ask subjects to sit while their faces are manipulated to either be smiling or sad. Although there is some debate about the level of efficacy, there appear to be measurable effects, so that even when the subjects are not able to identify the expression on their faces, their reports on how they feel show that having a ‘happy’ face makes them feel happier! [Ja84;Ma87]

This may seem odd, since it makes sense that unless we are hiding our emotions we look how we feel, but this suggests that we actually feel how we look! In fact, research on emotion suggests that higher-level emotion does often include this reflective quality. If your heart rate is racing, but something good is happening, then it must be very good. Indeed, romantic novels are full of descriptions of pounding hearts! Effectively some of how we feel is ‘in our heads’, but some is in our bodies. These can become ‘confused’ sometimes. Imagine a loud bang has frightened you and then you realise it is just a child who has burst a balloon. You might find yourself laughing hysterically. The situation is not really that funny, but the heightened sense of arousal generated by the fear is still there when you realise everything is fine and maybe a bit amusing. Your body is still aroused, so your brain interprets the combination as being VERY funny.

In the last chapter we discussed creativity and physical action, which is as much about the mind and body working together as about the mind alone. There are well-established links between mood and creativity, with positive moods on the whole tending to increase creativity compared with negative moods. One of the experimental measures used to quantify ‘creativity’ is to ask subjects to create lists of novel ideas; for example, ‘how many uses can you think of for a brick’. In one set of experiments the researchers asked subjects either to place their hands on the table in front of them and press downwards, or to put their hands under the table and press up. While pressing they were asked to perform various ‘idea list’ tasks. Those who pressed up generated more ideas than those who pressed down. The researchers’ interpretation was that the upward pressing made a positive ‘welcome’ gesture, which increased the creativity, whereas the pressing down was more like a ‘go away’ gesture. Again, body affects mind.

5.4 Cybernetics of the body

We have seen how the body can be regarded as a mechanical thing with levers (bones), pivots (joints) and pulleys (tendons). However, with our brains in control (taking the dualist view), we are more like what an engineer would regard as a ‘control system’ or ‘cybernetic system’. The study of controlled systems goes back many hundreds of years to the design of clocks and later the steam engine.

There are two main classes of such systems. The simplest are open loop control systems (fig. 5.2). In these the controller performs actions on the environment according to some setting, process or algorithm. For example, you turn a simple electric fire on to keep warm and it generates the exact amount of heat according to the setting.

This form of control system works well when the environment and indeed the operation of the control system itself are predictable. However, open loop control systems are fragile. If the environment is not quite as expected (perhaps the room gets too hot) they fail.

More robust control systems use some sort of feedback from the environment to determine how to act to achieve a desired state; this is called closed loop control (fig. 5.3). An electric fire may have a temperature sensor, so instead of turning it on and off to produce a predetermined heat, you set a desired temperature and the fire turns on if it is below the temperature and off if it is above.
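The difference between the two kinds of fire can be seen in a toy simulation. This is a minimal sketch under loudly simplified assumptions: each step the room loses a fixed fraction of its temperature difference with the outside, the open loop fire is set for a 10 °C day, and the thermostat simply switches a fixed heater on whenever the room is below target. All constants and names are hypothetical.

```python
LOSS_RATE = 0.1      # assumed: fraction of indoor/outdoor difference lost per step
TARGET = 20.0        # desired room temperature (deg C)
HEATER_POWER = 2.0   # assumed: degrees added per step while the thermostat is on


def open_loop_fire(room_temp: float) -> float:
    # Fixed setting chosen for a 10 deg C day; never looks at room_temp.
    return LOSS_RATE * (TARGET - 10.0)


def thermostat_fire(room_temp: float) -> float:
    # Closed loop: on if the measured temperature is below target, off otherwise.
    return HEATER_POWER if room_temp < TARGET else 0.0


def simulate(fire, outside_temp: float, steps: int = 200, room_temp: float = 15.0) -> float:
    """Run the room model and return the final temperature."""
    for _ in range(steps):
        room_temp += fire(room_temp) - LOSS_RATE * (room_temp - outside_temp)
    return room_temp


for outside in (10.0, 18.0):
    print(f"outside {outside:4.1f}: open loop {simulate(open_loop_fire, outside):5.1f}, "
          f"thermostat {simulate(thermostat_fire, outside):5.1f}")
```

On the day it was set for, the open loop fire settles at 20 °C; when the weather warms to 18 °C it drives the room towards 28 °C. The thermostat, using feedback, keeps the room within a couple of degrees of 20 °C in both cases.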

One of the earliest explicit uses of closed loop control was the centrifugal governor on steam boilers (fig. 5.4). Escaping steam from the boiler is routed so that it spins a small ‘merry-go-round’ arrangement of two heavy balls. As the balls swing faster they rise in the air. However, the balls are also linked to a valve, so as they rise they open the valve, releasing steam. If the pressure is too high it makes the balls spin faster, which opens the valve and reduces the pressure. If the pressure is too low, the balls do not spin much, the valve closes and the pressure increases.

This is an example of negative feedback, which tends to lead to stable states. It is also possible to have positive feedback, where a small change from the central state leads to more and more change. Imagine if the centrifugal governor were altered so that the rising balls closed the valve and vice versa: a rise in pressure would spin the balls faster, close the valve further and raise the pressure still more. In nature, positive feedback often leads to catastrophes such as an avalanche, when small amounts of moving snow make more and more snow move.
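The contrast between the two kinds of feedback can be shown with a one-line update rule. In this hypothetical sketch each step changes the system by a fixed fraction (the ‘gain’) of its current deviation from the central state: a negative gain pushes it back, like the governor, while a positive gain pushes it further away, like the altered governor or the avalanche.

```python
def run_feedback(gain: float, start: float, centre: float = 0.0, steps: int = 10) -> list[float]:
    """Return the state after each step of x <- x + gain * (x - centre).

    -1 <= gain < 0: negative feedback, deviations shrink steadily.
    gain > 0:       positive feedback, deviations grow.
    """
    x = start
    history = [x]
    for _ in range(steps):
        x = x + gain * (x - centre)
        history.append(x)
    return history


print(run_feedback(-0.5, 1.0, steps=4))  # [1.0, 0.5, 0.25, 0.125, 0.0625]
print(run_feedback(+0.5, 1.0, steps=4))  # [1.0, 1.5, 2.25, 3.375, 5.0625]
```

With positive feedback even a tiny starting deviation of 0.001 grows past 3 within 20 steps: the small disturbance that starts an avalanche.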

At first positive feedback may not seem very useful. However, it can be used to produce very rapid ‘hair trigger’ responses. In our bodies we find a mixture of different kinds of control mechanism. For example, the immune system uses positive feedback loops to produce different kinds of cells in sufficient quantity to fight infection or to create antibodies. In a healthy immune system there is also a negative feedback loop to regulate production of these kinds of cells before they start to attack the body itself. In an auto-immune disease, this balancing system has failed.

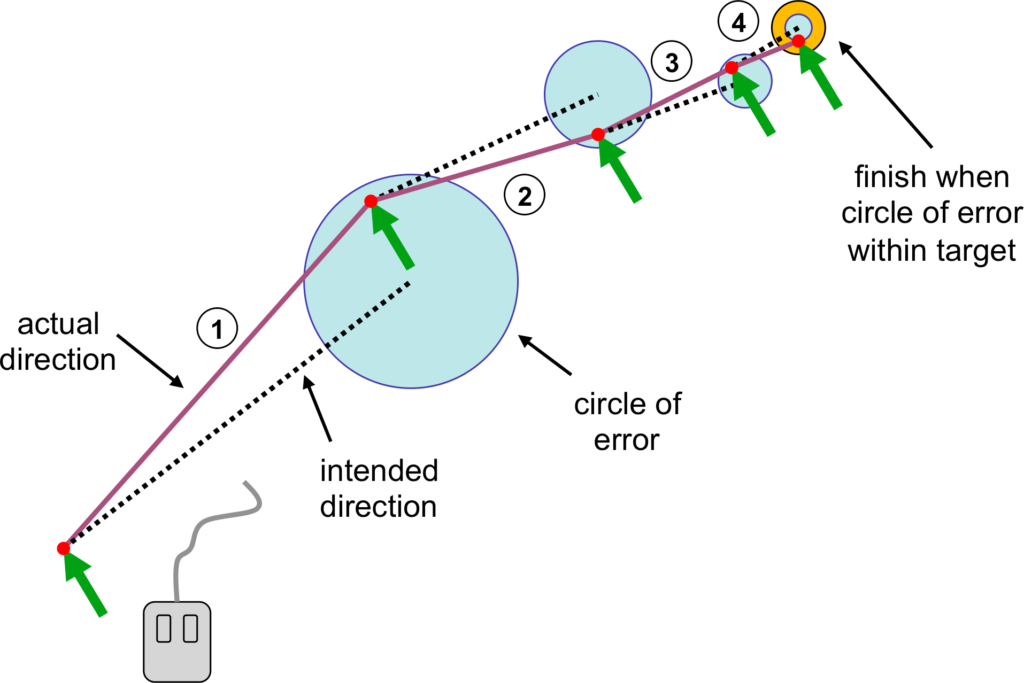

When we want to position something we use hand–eye coordination, seeing where our hands are and then adjusting their position until it is right. Look at the dot in Figure 5.5, then reach your finger out to touch it. You may be able to notice yourself slow down as you get close and make minor adjustments to your finger position. That is closed loop control using negative feedback.

Of course, recall that our bodies are like networked systems with delays between eye and action of around 200ms (Chapter 3). As these minor adjustments depend on the feedback from eye to muscle movement, we can only manage about 5 adjustments per second.

These time delays are one of the explanations for Fitts’ Law, a psychological result discovered by Paul Fitts in the 1950s. Fitts drew two parallel target strips some distance apart on a table. His subjects were asked to tap the first target, then the second, then back to the first repeatedly as fast as they could [Fi54].

He found that the subjects took longer to move between narrower targets, not surprising as it is harder to position your finger or a pointer on a smaller target. He also found that placing the targets further apart had the same effect, again not surprising as the arm has to move further.

However, what was surprising was the way these two effects precisely cancelled out: if you made the targets twice as big, and placed them twice as far away, the average time taken was the same. All that mattered was the ratio between them; in mathematical terms it was scale invariant.

In addition, one might think that for a given target size the time would increase linearly, so that each 10 cm moved would take a roughly similar time, just like driving down a road, or walking. However, Fitts found instead that time increased logarithmically: the difference between moving 10 cm and 20 cm was the same as the difference between moving 20 cm and 40 cm. When the targets got further apart the subjects moved their arms faster. This gave rise to what is known as Fitts’ Law:

time = a + b * log2( distance / size)

Fitts called the log ratio the ‘index of difficulty’ (ID).

ID = log2( distance / size )
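The two formulae translate directly into code. The sketch below uses the form given above; the constants a = 0.1 s and b = 0.1 s/bit are made up for illustration (real values have to be measured for each device and person). It checks the two surprising properties: doubling both distance and size leaves the time unchanged, and each doubling of distance adds the same fixed amount, b, however far apart the targets already are.

```python
import math


def index_of_difficulty(distance: float, size: float) -> float:
    """Fitts' index of difficulty in bits: log2(distance / size)."""
    return math.log2(distance / size)


def fitts_time(distance: float, size: float, a: float = 0.1, b: float = 0.1) -> float:
    """Predicted movement time (s); a and b are hypothetical device constants."""
    return a + b * index_of_difficulty(distance, size)


# Scale invariance: twice as far to a target twice as big takes the same time.
print(fitts_time(160, 10), fitts_time(320, 20))  # both 0.5

# Logarithmic growth: 10 -> 20 cm costs the same extra time as 20 -> 40 cm
# (both differences are approximately b = 0.1 s).
print(fitts_time(20, 1) - fitts_time(10, 1), fitts_time(40, 1) - fitts_time(20, 1))
```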

Fitts’ Law has proved very influential in human–computer interaction. The precise details of the formula vary between different sources, as there are variants for differently shaped targets, and for whether you measure distance to the centre or edge of a target and size by diameter or radius. However, it appears to hold equally both when a mouse is used to move a pointer on the screen to hit an icon, and when moving your hand to hit a strip. Each device has different constants ‘a’ and ‘b’ depending on its difficulty, and individuals vary, but the underlying formula is robust. Further studies have shown that it also works when ‘acceleration’ is added to the mouse, varying the relationship between mouse and pointer speed, or when other complications are added. The formula has even been embodied in an ISO standard (ISO 9241-9) for comparing mice and similar pointing devices [IS00].

When Fitts was doing his experiments, Shannon had recently finished his work on measuring information [Sh48;SW63], where log terms are also used. This led Fitts to describe his results in terms of the information capacity of the motor system. He measured the index of difficulty in ‘bits’; the reciprocal of ‘b’ (1/b), known as the ‘throughput’, then gives the information capacity of the channel in bits per second, just like measuring the speed of a computer network.
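In practice ‘a’ and ‘b’ are found by timing many movements and fitting a straight line of time against ID; 1/b is then the throughput in bits per second. The sketch below does an ordinary least-squares fit. The ‘trials’ are synthetic, generated from assumed values a = 0.2 s and b = 0.15 s/bit rather than measured, so the fit simply recovers those numbers; with real data the points would scatter around the line.

```python
import math


def fit_fitts(trials: list[tuple[float, float, float]]) -> tuple[float, float, float]:
    """Least-squares fit of time = a + b * ID.

    trials: (distance, size, time_in_seconds) for each movement.
    Returns (a, b, throughput), where throughput = 1 / b in bits per second.
    """
    ids = [math.log2(d / s) for d, s, _ in trials]
    times = [t for _, _, t in trials]
    mean_id = sum(ids) / len(ids)
    mean_t = sum(times) / len(times)
    sxx = sum((x - mean_id) ** 2 for x in ids)
    sxy = sum((x - mean_id) * (y - mean_t) for x, y in zip(ids, times))
    b = sxy / sxx
    a = mean_t - b * mean_id
    return a, b, 1.0 / b


# Synthetic trials from assumed constants (not real measurements).
TRUE_A, TRUE_B = 0.2, 0.15
trials = [(d, 10.0, TRUE_A + TRUE_B * math.log2(d / 10.0)) for d in (80.0, 160.0, 320.0, 640.0)]

a, b, throughput = fit_fitts(trials)
print(f"a = {a:.2f} s, b = {b:.2f} s/bit, throughput = {throughput:.1f} bits/s")
```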

An alternative (and complementary) account can be expressed in terms of the corrective movements and delays described in Chapter 3: that is, a cybernetic model of the closed loop control system.

Our muscles are powerful enough to move nearly full range in a single ‘hand–eye’ feedback time of 200ms. So when you see the target, your brain ‘tells’ your hand to move to the seen location. However, your muscle movement is not perfect, so you don’t quite hit the target on the first try; you just get closer. Your brain sees the difference and tells your arm to move the smaller distance, and so on until you are within the target, and you have finished. If you assume that the movement error for large movements is proportionately larger, then the ‘log’ law results: each correction shrinks the remaining distance by roughly the same fraction, so the number of corrections, and hence the time, grows with the logarithm of the ratio of distance to target size.

The ‘information capacity’ of the motor system measured in bits/second is generated by a combination of the accuracy of your muscles and the delays in your nerves: the physical constraints of your body translated into digital terms.
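The cybernetic account can be turned into a small simulation. This is a hypothetical sketch, assuming each movement takes one 200 ms feedback loop and leaves 10% of the remaining distance still to go (in line with the 90% first movement in the Figure 5.6 caption), and that movements repeat until the finger is within half the target size of its centre. There is no logarithm in the movement loop, yet the log law emerges.

```python
import math

FEEDBACK_LOOP_S = 0.2   # assumed eye-to-hand loop time (about 200 ms, Chapter 3)
RESIDUAL = 0.1          # assumed: each movement leaves 10% of the distance still to go


def movements_needed(distance: float, size: float, residual: float = RESIDUAL) -> int:
    """Count ballistic movements until the finger is within the target."""
    remaining = distance
    moves = 0
    while remaining > size / 2:   # not yet inside the target
        remaining *= residual     # error proportional to the distance just moved
        moves += 1
    return moves


def movement_time(distance: float, size: float) -> float:
    """Total time: one feedback loop per movement."""
    return movements_needed(distance, size) * FEEDBACK_LOOP_S


# Scale invariance: doubling distance and size needs the same number of moves.
print(movements_needed(320, 10), movements_needed(640, 20))  # 2 2

# Each tenfold increase in distance adds one more correction (0.2 s).
# Prints: 50 2, then 500 3, then 5000 4.
for d in (50, 500, 5000):
    print(d, movements_needed(d, 4))

# Capacity implied by these assumptions: bits gained per loop / loop time.
print(math.log2(1 / RESIDUAL) / FEEDBACK_LOOP_S)  # about 16.6 bits per second
```

The 16.6 bits per second follows purely from the assumed numbers. With a less accurate first movement (say 20% left over each time) the same calculation gives about 11.6 bits per second, showing how muscle accuracy and nerve delay together set the capacity.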

Fig. 5.6 Series of hand movements towards target (from [Dx03]). Note, exaggerated distances: the first ballistic movement is typically up to 90% of the final distance.

Box 5.1 Forever cyborgs

We saw in Chapter 3 that prosthetics, including the simple walking stick, are very old. More fundamentally, hominid tool use pre-dates Homo sapiens by several million years; from its very beginning, our species has used tools to augment our bodies. This is particularly evident in Fitts’ Law, which is often seen as a fundamental psychological law of the human body and mind.

Draw a small cross on a piece of paper and put it on a table in front of you. Put your hands on your lap, look at the cross and then, with your eyes closed, try to put your hand over the cross. Move the paper around and try again. So long as it is in reach you will probably find you can cover it with your hand on virtually every attempt.

Now do the same with just your finger and wrist. Rest your hand on this book, and focus on a single letter not too far from your hand. As before, close your eyes, but this time try to cover the letter with your finger. Again, you will probably find that, so long as the letter is close enough to touch without moving your arm (wrist and finger only), you can cover the letter with your finger almost every time.

Fitts’ Law is about successive corrections, but in fact your arm is accurate, in a single ballistic movement, to the level required by your hand, its effector, and this is also true for your finger and its effector, your fingertip. The corrections are only needed when you have a tool (mouse pointer, pencil, stick) with an end that is smaller than your hand or fingertip.

In other words, Fitts’ Law, a fundamental part of our human nature, is a law of the extended human body.

We really have always been cyborgs.

5.5 The adapted body

When something is simple, people often say, ‘even a child could do it’. For computers and hi-tech appliances this is not really appropriate, as we all know that it is those who are older who most often have problems! However, one can apply a different criterion: ‘could a caveman use it?’. Now by this we do not mean some sort of time machine dropping an iPad into a Neolithic woman’s hands, but rather to recognise that there has not been very much time since the first sophisticated societies, barely 10,000 years, not enough time for our bodies or brains to have significantly evolved. That is, we live in a technological society and learn very different things as we grow up in such a world, yet we still have bodies and brains roughly similar to those of our cave-dwelling ancestors. If we did have a time machine and could bring a healthy orphan baby forward from 10,000 years ago, it is likely that she would grow normally and be no different from a baby born today.

However, while humans were hunter-gatherers 10,000 years ago, Homo sapiens as a species had over 100,000 years of development before that, and our species is itself part of a process going back many millions of years. Given this, it seems likely that:

(a) we are well adapted to the world, and

(b) the world we are adapted to is natural, not technological.

James Gibson, a psychologist studying perception and particularly vision, was one of the first to take this into account in the development of what later came to be called ecological psychology. Previous research had considered human vision as a fairly abstract process turning a 2D pattern of colour into a 3D model in the head. However, Gibson saw it as an intimate part of an acting human engaged in the environment. [Gi79]

Gibson argued that aspects of the environment ‘afford’ various possibilities for action. For example, a hollow in a stone affords filling with water; a rock of a certain height affords sitting upon. These possibilities are independent of whether we take advantage of them or whether we even know they exist. An invisible rock would afford sitting upon just as much as a visible one. However, if our minds and bodies are adapted to be part of this environment, then our perceptions will be precisely adapted to recognise and respond to the visual and other sensory effects caused by the affordances of the things in the world. We will be revisiting Gibson and affordance in Chapter 8.

Evolutionary psychologists, whom we mentioned earlier in the context of the ‘Swiss Army Knife’ model of specialised intelligences, try to understand how our cognitive systems have evolved and hence what they may be capable of today. The ‘social version’ of the Wason card test we saw in Chapter 4 comes from these studies. Reasoning from possible past lifestyles and environments to current abilities is of course potentially problematic, but can also be powerful as a design heuristic. If you are expecting the user of your product or device to have some cognitive, perceptual or motor ability that has no use in a ‘wild’ environment, they are likely to find the product impossible to use, or to need extensive training.

One example is when we have pauses in a sequence of actions. In the wild, if a sabre tooth tiger appears you run at once, you do not wait for a moment and then run. However, various sports and various user interfaces do require a short pause, for example the short pause needed between selecting a file onscreen and clicking the file name to edit it. Click too fast and the file will open instead. While we can do these actions by explicitly waiting for some indication that it is time for the next action, it is very hard to proceduralise this kind of act–pause–act sequence. It requires much practice in sport, and in the case of file name editing is something that nearly every experienced computer user still occasionally gets wrong.
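The act–pause–act problem comes from a timing rule inside the interface. The hypothetical sketch below shows the kind of rule involved when a second click lands on an already selected file name: inside the double-click window it opens the file, after it the name becomes editable. The 500 ms window is an assumed value; real systems use their own, usually user-configurable, setting.

```python
DOUBLE_CLICK_WINDOW_MS = 500  # hypothetical; real systems let users change this


def second_click_action(gap_ms: float) -> str:
    """What a second click on a selected file name does, given the pause before it."""
    if gap_ms < DOUBLE_CLICK_WINDOW_MS:
        return "open file"        # too quick: counted as a double-click
    return "edit file name"       # a deliberate pause: start renaming


for gap in (200, 450, 700):
    print(f"{gap} ms pause -> {second_click_action(gap)}")
```

The user who wants to rename has to hold back for longer than the window, an unnatural pause, and a slightly hurried click at 450 ms silently opens the file instead.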

Another example is the way we can ‘extend’ our body when driving a car or using a computer mouse. Our ability to work ‘through’ technology like this is quite amazing. A mouse often has different acceleration parameters, may be held at a slight angle so that it does not track horizontally, and may even be held upside-down by some left-handed users so that moving the mouse to the left moves the screen cursor to the right. However, after some practice we can achieve the Fitts’ Law behaviour, slickly operating the mouse almost as easily as we point with our fingers. The sense of ‘oneness’ with the technology is evident when things go wrong. Think of driving a car, of the moment when traction is not perfect on a slightly icy road, or when you rev the engine and the car does not accelerate because the clutch is failing. Whether or not the situation is dangerous enough to frighten you, you experience an odd feeling as if it were your own body not responding properly. You and the car are a sort of cyborg [Dx02].
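What the user adapts to is, in effect, a transformation between hand movement and cursor movement. The sketch below is a hypothetical transfer function, not any real operating system's: it flips the movement if the mouse is upside-down, rotates it by the angle the mouse is held at, and applies a crude two-level ‘acceleration’ gain. Every parameter value is an assumption for illustration.

```python
import math


def mouse_to_cursor(dx: float, dy: float, angle_deg: float = 0.0,
                    upside_down: bool = False, base_gain: float = 2.0,
                    fast_threshold: float = 10.0, fast_boost: float = 1.5) -> tuple[float, float]:
    """Map one mouse movement (dx, dy) to a cursor movement (hypothetical model)."""
    if upside_down:
        # Turned through 180 degrees: left becomes right, forward becomes back.
        dx, dy = -dx, -dy
    # Mouse held at a slight angle: its axes are rotated relative to the screen.
    theta = math.radians(angle_deg)
    rx = dx * math.cos(theta) - dy * math.sin(theta)
    ry = dx * math.sin(theta) + dy * math.cos(theta)
    # Crude 'acceleration': fast movements are amplified more than slow ones.
    gain = base_gain * (fast_boost if math.hypot(dx, dy) > fast_threshold else 1.0)
    return rx * gain, ry * gain


print(mouse_to_cursor(5, 0))                     # (10.0, 0.0)
print(mouse_to_cursor(20, 0))                    # fast movement: (60.0, 0.0)
print(mouse_to_cursor(-5, 0, upside_down=True))  # left on the desk, right on screen: (10.0, 0.0)
print(mouse_to_cursor(5, 0, angle_deg=10))       # drifts slightly off horizontal
```

None of these distortions is visible to the user directly; practice alone is what lets us aim ‘through’ them as if pointing with a finger.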

In fact, we go on adapting during our lifetime. One of the great strengths of humans is our plasticity: the ability of our brains to change in order to accommodate new situations. We may start off just like a cave-baby, but as adults growing and living in a technological world our minds and bodies become technologically adapted. Brain scans of taxi drivers show whole areas devoted to spatial navigation far larger than in normal (non-taxi driver) brains. This is not so much physical growth but more that the part dedicated to this function is making use of neighbouring parts of the brain, which would otherwise have been used for other purposes.

Such re-mapping can work very quickly. If a child is born with two fingers joined by a web of skin, brain scans show a single region corresponding to the joined fingers. However, within weeks of an operation to separate the fingers, separate brain areas become evident. Similarly, in experiments where participants had fingers strapped together, the distinct brain areas for the two fingers began to fuse after a few weeks.

In comparison, change in the body takes longer, but of course we know that if we exercise muscles grow stronger and larger. In addition, any activity that requires movement and coordination creates interlinked physical and neurological changes. If you do sports your hand–eye coordination for the relevant limbs and actions will improve.

Dundee University Medical School measures the digital dexterity of new students. Well-controlled hands are especially important in surgery where a slip could cost a life. While individual students varied, overall dexterity did not change much from year to year until the mid-2000s, when students (on average) displayed greater dexterity in their thumbs than in the past. Indeed their thumbs now had the same dexterity as index fingers, rather than being a relatively clumsy digit, useful only for grasping. This was attributed to the effect of PlayStation use on a generation.

5.6 Plans and action

One morning Alan was having breakfast. He served himself a bowl of grapefruit segments and then went to make his tea. While making the tea he went to the fridge to get a pint of milk, but after getting the milk from the fridge he only just stopped himself in time as he was about to pour the milk onto the grapefruit!

What went wrong?

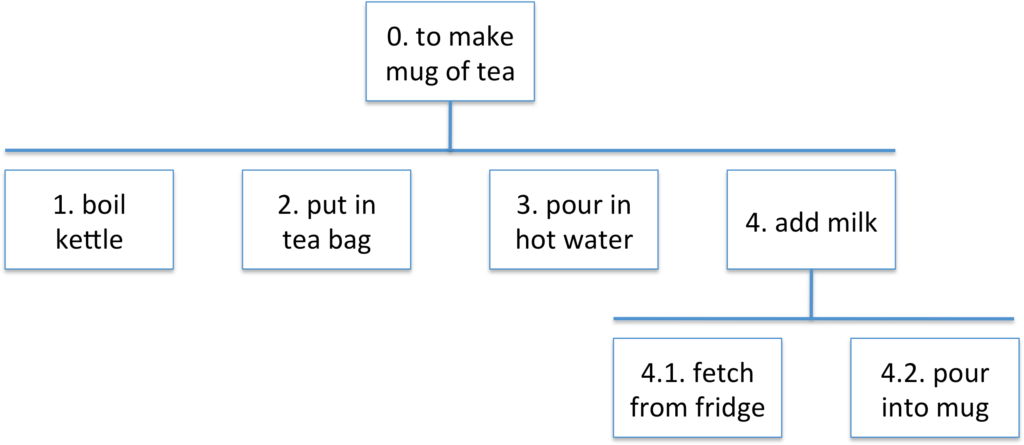

Older models of cognition focused on planned activity. You start with something you want to achieve (a goal), then decide how you are going to achieve it (a plan), and finally do the things that are needed (execution). There are many very successful methods that use this approach. Two of the oldest and best known in HCI are GOMS (goals, operators, methods and selection rules) [CM83] and HTA (hierarchical task analysis) [AD67;Sh95]. GOMS is focused on very low-level practised tasks, such as correcting mistakes in typing, whereas HTA looks more at higher-level activity such as booking a hotel room. Making tea is somewhere at the intersection of the two, but we will focus on HTA as it is slightly easier to explain.

HTA basically takes a goal and breaks it down into smaller and smaller sequences of actions (the tasks) that will achieve the goal. The origins of hierarchical task analysis are in Taylorist time-and-motion studies for ‘scientific management’ of workplaces, decomposing repetitive jobs into small units in the most ‘efficient’ way. Later, during the Second World War, new recruits had to be trained to use relatively complex equipment, for example stripping down a rifle. Training needed to be done quickly in order to get them onto the battlefield, yet in such a way that under pressure they could automatically do the right actions. Hierarchical task analysis provided a way to create the necessary training materials and documentation.

While HTA was originally developed for ‘work’ situations, it can be applied to many activities. Figure 5.9 shows an HTA for the task of making a mug of tea (with a teabag, not proper tea from a teapot!). The diagram shows the main tasks and sub-tasks.

The task hierarchy tells you what steps you need to do, and in addition there is a plan saying in what order to do the steps. For example, for task 4 the plan might be:

Plan 4. if milk not out of fridge do 4.1 then do 4.2
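An HTA plan like this is essentially a small program. The sketch below encodes Plan 4 as a function over a simple record of the kitchen. The sub-task names for 4.1 and 4.2 (‘get milk from fridge’, ‘pour milk into mug’) are assumptions based on the surrounding text rather than copied from Figure 5.9, and the dictionary-based world model is a hypothetical simplification.

```python
def plan_4(world: dict) -> list[str]:
    """Plan 4: if milk not out of fridge do 4.1, then do 4.2.

    `world` is a hypothetical record of the kitchen; the plan checks it
    once, then issues its steps in order.
    """
    steps = []
    if not world.get("milk out of fridge", False):
        steps.append("4.1 get milk from fridge")
        world["milk out of fridge"] = True
    steps.append("4.2 pour milk into mug")
    world["milk in mug"] = True
    return steps


print(plan_4({"milk out of fridge": False}))  # ['4.1 get milk from fridge', '4.2 pour milk into mug']
print(plan_4({"milk out of fridge": True}))   # ['4.2 pour milk into mug']
```

Notice that the plan simply assumes the mug is the target; it never looks to see which container is actually in front of it.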

If you follow the steps in the right order according to the plan, then you get a mug of tea. Easy — but why did it go wrong with the grapefruit?

You might have noticed that the world of task analysis is very close to an open loop control system. Not entirely, since the plan for Task 4 includes some perception of the world (if milk not out of fridge), but predominantly it is a flow of command and control from inside to out. It assumes that we keep careful track in our heads of what we are doing and translate this into action in the world, but if this were always the case, why the near-mistake with the grapefruit?

One explanation is that we simply make mistakes sometimes! However, not all mistakes are equally likely. One would be unlikely to pour milk onto the bare kitchen worktop or onto a plate of bacon and eggs. The milk on the grapefruit is a form of capture error: the bowl containing the grapefruit might on other occasions hold cornflakes. When that is the case and you are standing in the kitchen with milk in your hand, having just got it out of the fridge, it is quite appropriate to pour the milk into the bowl.

Staying close to the spirit of HTA we can imagine a plan for making tea that is more like:

if milk not out of fridge do 4.1 when milk in hand do 4.2

However, if we were to analyse ‘prepare bowl of cornflakes’, we would have a rule that says:

when milk in hand pour into bowl

So when you have milk in your hand, what is the ‘correct’ thing to do, pour into a mug or pour into a bowl? One way is to remember what you are in the middle of doing, but the mistake suggests that actually Alan’s actions were driven by the environment. The mug on the worktop says ‘please fill me’, but so also does the cereal bowl. Alan was acting more in a stimulus–response fashion than one based on pre-planned actions.
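The contrast can be sketched as two ways of choosing an action. In this hypothetical model each habit is a rule learned in some task and triggered by cues in view. Acting purely on cues, the first matching rule wins (list order stands in for whichever object happens to catch the eye); remembering the current task filters the candidates. The rules and cue sets are illustrative assumptions.

```python
from typing import Optional

# Each habit: (task it was learned in, cues that trigger it, action).
HABITS = [
    ("prepare cornflakes", {"milk in hand", "bowl"}, "pour milk into bowl"),
    ("make tea", {"milk in hand", "mug"}, "pour milk into mug"),
]


def triggered(scene: set[str]) -> list[tuple[str, str]]:
    """All habits whose cues are all present in the scene."""
    return [(task, action) for task, cues, action in HABITS if cues <= scene]


def act(scene: set[str], remembered_task: Optional[str] = None) -> Optional[str]:
    candidates = triggered(scene)
    if remembered_task is not None:
        # Plan-driven: keep only habits belonging to what we are doing.
        candidates = [c for c in candidates if c[0] == remembered_task]
    # Stimulus-driven: otherwise the first cue to grab attention wins.
    return candidates[0][1] if candidates else None


kitchen = {"milk in hand", "bowl", "mug"}
print(act(kitchen))                              # pour milk into bowl (the grapefruit slip)
print(act(kitchen, remembered_task="make tea"))  # pour milk into mug
```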

Preparing breakfast is a practised activity where, if anywhere, forms of task analysis should work. In more complex activities, many argue that task analysis gives far too simplistic a view of the world. Nowadays most people using such methods would regard task analysis as a useful but simplified model. However, in the mid-1980s, when HCI was developing as a field, the dominant cognitive science thinking was largely reductionist. A few works challenged this, most influentially Lucy Suchman’s Plans and Situated Actions [Su87]. Suchman was working at Xerox and using ethnographic techniques borrowed from anthropology. She observed that when a field engineer tried to fix a malfunctioning photocopier, they did not go through a set process or procedure, but instead would open the photocopier and respond to what they saw. To the extent that plans were used, they were adapted and deployed in a situated fashion driven by the environment: what they saw with their eyes in the machine.

Cognitive scientists have developed their own responsive methods of problem-solving analysis where tasks can be adapted based on what is encountered in the environment. In artificial intelligence new problems are often tackled using means–ends analysis. Beginning with a goal (e.g. make tea), one starts to solve it, but becomes blocked by some impasse (no milk), which then gives rise to a sub-goal (get milk from fridge). However, this still does not capture the full range of half-planned, half-recognised activities we see in day-to-day life.
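The impasse-and-sub-goal pattern can be sketched in a few lines. This is a simplified, hypothetical version of means–ends analysis: each operator lists the state it achieves and its preconditions, and any unmet precondition (an impasse) becomes a sub-goal to be solved first. The kitchen operators are made up for illustration.

```python
# state it achieves -> (operator name, preconditions); hypothetical kitchen operators
OPERATORS = {
    "tea made": ("make tea", ["kettle boiled", "milk in hand"]),
    "kettle boiled": ("boil kettle", []),
    "milk in hand": ("get milk from fridge", []),
}


def achieve(goal: str, state: set[str], log: list[str]) -> None:
    """Reach `goal`, turning each unmet precondition into a sub-goal."""
    if goal in state:
        return
    name, preconditions = OPERATORS[goal]
    for condition in preconditions:
        if condition not in state:
            log.append(f"impasse: need {condition}")
            achieve(condition, state, log)  # sub-goal
    log.append(name)
    state.add(goal)


def solve(goal: str, start: set[str]) -> list[str]:
    log: list[str] = []
    achieve(goal, set(start), log)
    return log


print(solve("tea made", {"kettle boiled"}))
# ['impasse: need milk in hand', 'get milk from fridge', 'make tea']
```

Unlike a fixed plan, nothing here decides in advance to fetch the milk; that step appears only when the impasse is hit.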

In reality, we have many ways in which individual actions are strung together: some we are explicitly aware of doing, others are implicit or sub-conscious. Some involve internally driven pre-planned or learned actions, others are driven by the environment (fig. 5.10). It is often hard to tell which is at work by watching a user, and hard even when we introspect, since once we think about what we are doing we tend to change it! This is why errors like the grapefruit bowl are so valuable as they often reveal what is going on below our level of awareness.

| | internally driven | environment driven |
|---|---|---|
| explicit | (a) following known plan of action | (b) situated action, means–ends analysis |
| implicit | (c) proceduralised or routine action | (d) stimulus–response reaction |

Fig. 5.10 types of activity (from [Dx08])

In most activities there will also be a mix of these types of activity and, over time, frequent environment-driven actions (b) are thoroughly learned and end up as proceduralised or routine actions (c): practice makes perfect. Anyone who has practised a sport or a musical instrument will have found this for themselves.

Box 5.2 Iconic case study: Nintendo Wii

When Nintendo launched the Wii in 2006, they created a new interaction paradigm. Like the iPhone that followed it, the Wii allowed us to bring learned gestures and associations from our day-to-day physical world into our physical–digital interactions. A two-part controller translated body movement into gaming actions, and in doing so opened computer gaming up to a completely new market. The Microsoft Kinect took the Wii concept further by literally using the gamers’ bodies as controllers. The Wii/Kinect revolution brought with it a lot of interesting case material on how we perceive physical space. Consider, for example, two players standing side by side playing Kinect table tennis. One serves diagonally to the other across the net. If the receiving player is right-handed and on the left-hand side of the court (from their perspective), will they be forced to receive the ball backhanded?

https://static.daniweb.com/attachments/0/KinectSports_Table_Tennis_%282%29.jpg (SG modified)

5.7 The embodied mind

Like our perception, which is intimately tied to the physical world, our cognition itself is expressed physically. When we add up large numbers we use a piece of paper. When we solve a jigsaw puzzle we do not just stare and then put all the pieces in place, we try them one by one. Researchers studying such phenomena talk about distributed cognition, regarding our cognition and thinking to be not only inside our head, but distributed between our head, the world and often other people [HH00;Hu95]. Early studies looked at Micronesian sailors, navigating without modern instruments for hundreds of miles between tiny islands. They found that no single person held the whole navigation in their head, but it was somehow worked out between them [Hu83;Hu95].

More radically still, some philosophers talk about our mind being embodied, not just in the sense of being physically embodied in our brain, but in the sense that, all together, our brain, body and the things we manipulate achieve ‘mind-like’ behaviour [Cl98]. If you are doing a sum on a piece of paper, the paper, the pencil and your hand are just as much part of your ‘mind’ as your brain.

If you think that is far-fetched, imagine losing your phone: where are the boundaries of your social mind?

Theorists who advocate strong ideas of the embodied mind would argue that we are creatures best fitted to a perception–action cycle and that we tend to be parsimonious with mental representations, allowing the environment to encode as much as possible.

“In general evolved creatures will neither store nor process information in costly ways when they can use the structure of the environment and their operations on it as a convenient stand-in for the information-processing operations concerned.” ([Cl89] as quoted in [Cl98])

Clark calls this the ‘007 principle’, as it can be summarised as ‘know only as much as you need to know to get the job done’ [Cl98].

In the natural world this means, for example, that we do not need to remember what the weather is like now, because we can feel the wind on our cheeks or the rain on our hands. In a more complex setting this can include changes made to the world (e.g. the bowl on the worktop) and even changes made precisely for the reason of offloading information processing or memory (e.g. ticking off the shopping list). Indeed this is one of the main foci of distributed cognition accounts of activity [HH00].

It is not necessary to take a strong ‘embodied mind’ or even ‘distributed cognition’ viewpoint to see that such parsimony is a normal aspect of human behaviour. Why bother to remember the precise order of actions to make my mug of tea when it is obvious what to do when I have milk in my hand and black tea in the mug?

Of course parsimony of internal representation does not mean that there is no internal representation at all. The story of the grapefruit bowl would be less amusing if it happened all the time. While eating breakfast it is not unusual to have both a grapefruit bowl and a mug of tea out at the same time, but Alan had never before tried to pour milk on the grapefruit. As well as the reactive behaviour ‘when milk in hand pour in cup’, there is also some practised idea of what follows what (plan) and some feeling of being ‘in the middle of making tea’ (context, schema).
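A small Python sketch can show how a sense of being ‘in the middle of making tea’ might break ties between rules that share the same precondition. Two illustrative assumptions are made here, not taken from an established model: each rule is tagged with the schema it was learned in, and the active context takes priority over salience. When no rule from the current context applies, the choice falls back to pure stimulus–response.

```python
from typing import Optional

# Hypothetical rules: (object in hand, target object, schema the rule belongs to).
RULES = [
    ("milk", "mug", "making tea"),
    ("milk", "bowl", "preparing cornflakes"),
]

def choose_action(in_hand: str, visible: list[str],
                  context: Optional[str]) -> Optional[str]:
    """Pick an action, letting the current context break ties between rules.

    Rules whose precondition holds (object in hand, target visible) are
    candidates. A candidate from the active context wins; otherwise the
    first visible target in salience order is used.
    """
    candidates = [(t, s) for (h, t, s) in RULES if h == in_hand and t in visible]
    if not candidates:
        return None
    in_context = [t for (t, s) in candidates if s == context]
    if in_context:
        return f"pour {in_hand} into {in_context[0]}"
    # No context-specific rule applies: fall back to the most salient target.
    targets = {t for (t, _) in candidates}
    first = next(obj for obj in visible if obj in targets)
    return f"pour {in_hand} into {first}"
```

With `context="making tea"` the mug is chosen even when the bowl is more salient; with `context=None` the choice goes to whatever is noticed first. In this picture, a slip like the grapefruit bowl corresponds to the context momentarily losing its grip.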

Parsimony cuts both ways. If it is more efficient to ‘store’ information in the world we will do that. If it is more efficient to store it in our heads then we do that instead. Think of an antelope being chased by a lion. The antelope does not constantly run with its head turned back to see the lion chasing after it. If it did it would fall over or crash into a tree. Instead it just knows in its head that the lion is there, and keeps running.

A more everyday example involves workers in a busy coffee bar at Steve’s university. Two people work together at peak times: one takes the order and the money while the other makes and serves the coffee. Orders can be taken faster than fresh coffee can be made, so there is always a lag between taking and fulfilling an order. There are many possible combinations: four basic types of coffee (from espresso to latte); four possible cup sizes; one or two shots; no milk or one of three types of milk; and 12 optional flavoured syrups. Then there is the sequence in which orders are to be fulfilled: customers get upset if they are made to wait while the person behind them is served.

The staff devised the following solution: the person taking the order selects the appropriate paper cup (large, medium, small or espresso). They turn it upside down and write the order on the base. This creates a physical association between the cup and the order that simultaneously takes care of the size of the coffee and the exact type of coffee to go in it. The cups are then ‘queued’ on the counter in the order in which they are to be made, with the most immediate order being closer to the staff member making the coffee. The end result is that a great deal of information is efficiently dealt with through physical associations and interactions.
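In software terms, the row of upturned cups is a first-in, first-out queue whose entries carry their own data. The Python sketch below spells that structure out; the class and field names are hypothetical and the order strings are purely illustrative. The point of the café’s solution is that nobody has to hold this structure in their head: the cups on the counter are the data structure.

```python
from collections import deque
from dataclasses import dataclass

# Cup sizes as listed in the text; everything else is illustrative.
CUP_SIZES = ("espresso", "small", "medium", "large")

@dataclass
class Cup:
    size: str           # encoded by which physical cup is picked up
    written_order: str  # what is written on the upturned base

class Counter:
    """The row of upturned cups, modelled as a first-in, first-out queue."""

    def __init__(self) -> None:
        self.cups = deque()  # oldest order at the left, nearest the barista

    def take_order(self, size: str, details: str) -> None:
        if size not in CUP_SIZES:
            raise ValueError(f"no {size!r} cup")
        # Putting the cup at the back of the row records both the order and its turn.
        self.cups.append(Cup(size, details))

    def make_next(self) -> Cup:
        # The cup nearest the barista is always the oldest outstanding order.
        # Raises IndexError if there are no orders waiting.
        return self.cups.popleft()
```

Taking two orders and then calling `make_next()` returns the first cup placed on the counter, so nobody behind in the queue is served early.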

Box 5.3 External cognition: lessons for design

In design this means we have to be aware that:

(i) people will not always do things in the ‘right order’

(ii) if there are two things with the same ‘pre-condition’ (e.g. milk in hand), then it is likely that a common mistake will be to do the wrong succeeding action

(iii) we should try to give people cues in the environment (e.g. lights on a device) to help them disambiguate what comes next (clarifying context)

(iv) where people are doing complex activities we should try to give them ways to create ‘external representations’ in the environment

References

1. [AD67] Annett, J. and Duncan, K. D. (1967) Task analysis and training design. Occupational Psychology 41, 211-221. [5.6]

2. [CM83] Card, S. K., Moran, T. P. and Newell, A. (1983). The Psychology of Human–Computer Interaction. Lawrence Erlbaum Associates. ISBN 0-89859-859-1. [5.6]

3. [Cl89] Clark, A. (1989). Microcognition: Philosophy, Cognitive Science and Parallel Distributed Processing. MIT Press, Cambridge, MA. [5.7]

4. [Cl98] Clark, A. (1998). Being There: Putting Brain, Body and the World Together Again. MIT Press. [5.7;5.7;5.7]

5. [Dx08] Dix, A. (2008). Tasks = data + action + context: automated task assistance through data-oriented analysis. Keynote at Engineering Interactive Systems 2008 (incorporating HCSE 2008 and TAMODIA 2008), Pisa, Italy, 25–26 Sept. 2008. http://www.hcibook.com/alan/papers/EIS-Tamodia2008/ [5.6]

6. [Dx02] Dix, A. (2002). Driving as a Cyborg Experience. accessed Dec 2012, http://www.hcibook.com/alan/papers/cyborg-driver-2002/ [5.5]

7. [Dx03] Dix, A. (2003/2005). A Cybernetic Understanding of Fitts’ Law. HCIbook online! http://www.hcibook.com/e3/online/fitts-cybernetic/ [5.4]

8. [Fi54] Fitts, P. M. (1954). The information capacity of the human motor system in controlling the amplitude of movement. Journal of Experimental Psychology, 47(6), 381–391. (Reprinted in Journal of Experimental Psychology: General, 121(3), 262–269, 1992.) [5.4]

9. [Gi79] Gibson, J. (1979). The Ecological Approach to Visual Perception. New Jersey, USA, Lawrence Erlbaum Associates [5.2;5.5]

10. [HH00] Hollan, J., Hutchins, E. and Kirsh, D. (2000). Distributed cognition: toward a new foundation for human–computer interaction research. ACM Transactions on Computer-Human Interaction, 7(2), 174–196. [5.7;5.7]

11. [Hu83] Hutchins, E. (1983). Understanding Micronesian navigation. In D. Gentner and A. Stevens (Eds.), Mental Models, pp. 191–225. Hillsdale, NJ: Lawrence Erlbaum. [5.7]

13. [IS00] International Standard (2000). ISO 9241-9, “Ergonomic requirements for office work with visual display terminals, Part 9: Requirements for non-keyboard input devices”. [5.4]

14. [Ja84] Laird, J. (1984). The real role of facial response in the experience of emotion: A reply to Tourangeau and Ellsworth, and others. Journal of Personality and Social Psychology, 47(4), 909–917. [5.3]

15. [Ma87] Matsumoto, D. (1987). The role of facial response in the experience of emotion: More methodological problems and a meta-analysis. Journal of Personality and Social Psychology, 52(4), 769–774. [5.3]

16. [Sh95] Shepherd, A. (1995). Task analysis as a framework for examining HCI tasks, In Monk, A. and Gilbert N. (Eds.) Perspectives on HCI: Diverse Approaches. 145–174. Academic Press. [5.6]

17. [Sh48] Shannon, C. (1948). A Mathematical Theory of Communication. Bell System Technical Journal 27 (July and October): pp. 379–423, 623–656. http://plan9.bell-labs.com/cm/ms/what/shannonday/shannon1948.pdf. [5.4]

18. [SW63] Shannon, C. and W. Weaver (1963). The Mathematical Theory of Communication. Univ. of Illinois Press. ISBN 0252725484. [5.4]

19. [Su87] Suchman, L. (1987). Plans and Situated Actions: The Problem of Human–Machine Communication. Cambridge University Press. [5.6]